Background for VAEs

Variational autoencoders (VAEs) can generate images that are, ideally, statistically indistinguishable from examples in the training set. In this context they are an example of unsupervised learning, since they aim to capture the underlying patterns in the data rather than a mapping from inputs to labels. A VAE consists of three parts. First, an encoder network maps each input to the mean and variance of a Gaussian distribution over a set of latent variables. Second, the latent space is pushed toward a spherical (standard) Gaussian prior, which encourages it to capture the most likely underlying patterns. Third, a decoder network tries to reconstruct the original data from a sample of that distribution during training. The key here is that I can split the trained network down the middle, at the latent layer, and feed the decoder artificial latent variables, in the hope of producing new images that share the patterns and features of the training data and yet cannot be found in the training set.
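
To make the encoder, latent space, and decoder description concrete, here is a minimal sketch of the pieces that are specific to a VAE: sampling a latent vector with the reparameterization trick, the loss (reconstruction error plus the KL divergence between the encoder's diagonal Gaussian and a standard normal prior), and drawing latents straight from the prior at generation time. It uses plain Python lists in place of a deep learning framework. All function names are hypothetical, and squared pixel error as the reconstruction term is an assumption. In a real model the encoder and decoder would be neural networks and this loss would be backpropagated.

```python
import math
import random

def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension (reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))

def vae_loss(x, x_recon, mu, logvar):
    """Reconstruction error (sum of squared pixel errors, an assumed choice) plus the KL term."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, logvar)

def sample_prior(latent_dim, rng):
    """Generation time: skip the encoder and draw z from the N(0, I) prior for the decoder."""
    return [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]

rng = random.Random(0)
z = reparameterize([0.5, -0.2], [0.0, -1.0], rng)  # what the decoder sees during training
z_new = sample_prior(2, rng)                          # what the decoder sees when generating
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: encoder output equals the prior
```

The KL term is what keeps the latent space close to a standard Gaussian, which is why feeding the decoder samples from `sample_prior` can produce sensible new images.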

Why Characters?

Characters, such as the letters of an alphabet and logographs such as Chinese characters, follow characteristic patterns of curves and straight strokes. They tend to leave empty space in the middle and have many distinct edges, so that they remain discernible. I am curious to see whether I can make symbols that look like plausible letters, as it may even help my understanding of some of the patterns at play.

Setting up and running the VAE

I first generated a dataset of characters without rotation (10,000 PNGs at 256x256 pixels) and trained a VAE on them. Examples of the training data are shown below.

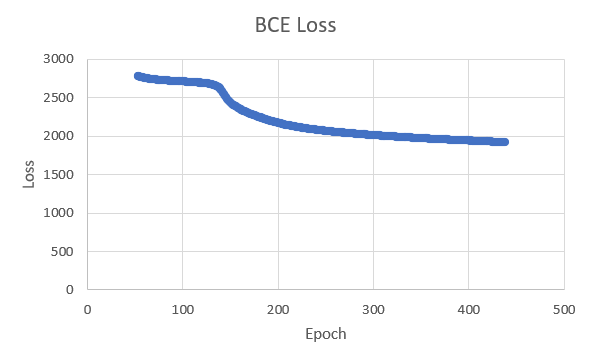

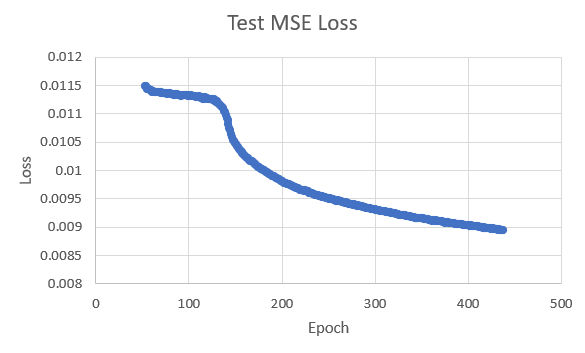

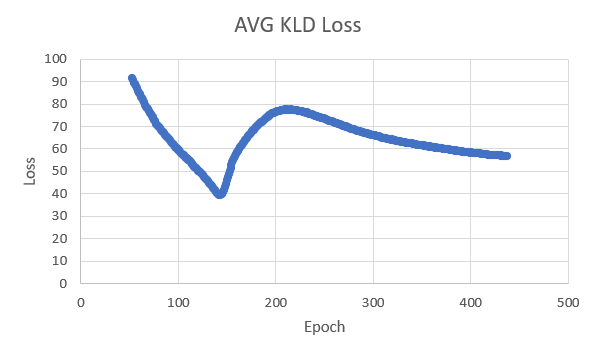

The results ended up being low quality, and the model made use of only a few of the 128 latent variables I provided. The graphs below show signs of memorization or weak learning: the KLD (Kullback-Leibler divergence) term decreases over time, while the test and reconstruction losses appear to level off asymptotically.
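
One way to quantify how many of the 128 latent variables a model actually uses is to average the KL term separately for each latent dimension over a batch of encoded examples. Dimensions whose average KL stays near zero match the prior regardless of the input, so the decoder cannot be getting information from them (this is often called posterior collapse). The sketch below assumes the encoder's `mu` and `logvar` outputs are available as lists; the function names and the 0.01 threshold are illustrative choices, not values from these experiments.

```python
import math

def per_dim_kl(mus, logvars):
    """Average KL(N(mu, sigma^2) || N(0, 1)) for each latent dimension across a batch."""
    n, d = len(mus), len(mus[0])
    totals = [0.0] * d
    for mu, logvar in zip(mus, logvars):
        for j in range(d):
            totals[j] += 0.5 * (mu[j] ** 2 + math.exp(logvar[j]) - 1.0 - logvar[j])
    return [t / n for t in totals]

def count_active(kl_per_dim, threshold=0.01):
    """Count latent dimensions whose average KL exceeds the (arbitrary) threshold."""
    return sum(1 for kl in kl_per_dim if kl > threshold)

# Toy batch of 3 encodings: dimension 0 carries information, dimension 1 matches the prior exactly.
mus = [[1.2, 0.0], [-0.8, 0.0], [0.5, 0.0]]
logvars = [[-2.0, 0.0], [-2.0, 0.0], [-2.0, 0.0]]
kls = per_dim_kl(mus, logvars)
print(count_active(kls))  # 1
```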

Results of the low quality model are shown below.

To improve the quality of the model, I added random rotations to the images so that it would see more diversity in the patterns and be better able to learn the core abstractions. I also reduced the number of latent variables to 27. Examples of this new data are shown below.
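
Random-rotation augmentation can be sketched as follows. In a PyTorch pipeline this is usually done with torchvision's `transforms.RandomRotation`. The pure-Python version below just shows the mechanics: each output pixel is mapped back through the inverse rotation and takes the nearest source pixel. The rotation range used for the dataset is not stated above, so `max_deg` is a free, hypothetical parameter, and images are assumed to be grayscale lists of rows with a 0 background.

```python
import math
import random

def rotate_nearest(img, angle_deg, fill=0):
    """Rotate a 2D grayscale image (a list of rows) about its centre.

    Uses inverse mapping with nearest-neighbour sampling; output pixels whose
    source falls outside the original image are set to `fill` (the background).
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Apply the inverse rotation to find which source pixel lands on (x, y).
            dx, dy = x - cx, y - cy
            sx = cos_a * dx + sin_a * dy + cx
            sy = -sin_a * dx + cos_a * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out

def random_rotation(img, max_deg, rng):
    """Augmentation step: rotate by an angle drawn uniformly from [-max_deg, max_deg]."""
    return rotate_nearest(img, rng.uniform(-max_deg, max_deg))

rng = random.Random(42)
glyph = [[0, 1, 0],
         [0, 1, 0],
         [0, 1, 0]]  # a tiny vertical stroke
print(rotate_nearest(glyph, 90))  # the stroke becomes horizontal
augmented = random_rotation(glyph, max_deg=30, rng=rng)
```

Because a new angle is drawn each time an image is used, the model rarely sees exactly the same pixels twice, which makes memorizing individual images harder.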

Promising early results can be observed below.

A journey through the latent hyperspace from [0, 0, ..., 0] to [1, 1, ..., 1] yields the following gif.
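
A path like this can be produced by linearly interpolating between the two latent vectors and decoding each intermediate vector as one frame of the gif. The sketch below only generates the latent vectors; it assumes the 27-dimensional model, the frame count is an arbitrary choice, and the decoding step (a hypothetical `decoder(z)` call) is left out.

```python
def interpolate_latents(start, end, n_frames):
    """Evenly spaced latent vectors from start to end (inclusive), one per gif frame."""
    if n_frames < 2:
        raise ValueError("need at least two frames")
    frames = []
    for i in range(n_frames):
        t = i / (n_frames - 1)
        frames.append([(1 - t) * a + t * b for a, b in zip(start, end)])
    return frames

dim = 27  # latent size of the rotated-character model
path = interpolate_latents([0.0] * dim, [1.0] * dim, n_frames=5)
# Each vector in `path` would then be passed through the decoder to render one frame.
print([round(z[0], 2) for z in path])  # [0.0, 0.25, 0.5, 0.75, 1.0]
```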

After several hours of training, the results show that training was successful.

After around 18 hours of training, the mean squared error on the test data leveled off. This suggests that further training would most likely lead to memorization, so I stopped training there. I was very satisfied with the results.
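
The decision to stop when the test error levels off can be automated with a simple plateau check (early stopping). In this sketch, `should_stop` is a hypothetical helper that takes a list of per-epoch test losses. The `patience` and `min_delta` values are illustrative, not settings used in the run above.

```python
def should_stop(test_losses, patience=5, min_delta=1e-4):
    """True when the best test loss of the last `patience` epochs has not beaten
    the best earlier loss by at least `min_delta`."""
    if len(test_losses) <= patience:
        return False
    best_before = min(test_losses[:-patience])
    recent_best = min(test_losses[-patience:])
    return recent_best > best_before - min_delta

history = [0.9, 0.5, 0.30, 0.30, 0.30, 0.30, 0.30, 0.30]
print(should_stop(history, patience=5))  # True: no improvement in the last 5 epochs
```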

Next, I trained the model on Chinese characters instead, to visualize the difference in the learned abstractions. Chinese characters are busier and more jagged than the English alphabet, so these features should shine through in the outputs. Examples of the training set are shown below.

I ran the VAE with the same hyperparameters as before; some examples of the output are shown below.

Generative Adversarial Networks Explanation

Now that the general abilities of a VAE have been characterized for this example, it is time to try another architecture: generative adversarial networks. This type of generative artificial intelligence borrows the concept of mimicry from evolutionary biology. It is common for harmless insects to look like venomous or poisonous counterparts. To survive and reproduce, the animals that eat these insects must learn to tell the harmless mimics apart from the insects that are actually venomous or poisonous, while the harmless insect must deceive the animal into thinking that eating it will cause harm. The result of this evolutionary arms race is an equilibrium in which the mimic looks very similar to the venomous or poisonous insect, while the predator becomes quite effective at detecting any differences.

This can lead to generative behaviour, since the mimic must capture fundamental abstractions such as pattern, size, shape, color, and movement style, and must even vary these features in a roughly Gaussian way with a spread similar to that of the real insect. If the mimic always had exactly the same pattern, one that the poisonous insect can display but rarely does, the predator would be tempted to take the risk, and would probably succeed.

A generative adversarial network (GAN) uses a very similar setup: one neural network (the generator) tries to generate images that could plausibly be found in the training set, and a separate neural network (the discriminator) tries to classify which images come from the training set and which were produced by the generator. Each network minimizes its loss by outcompeting the other, making this a zero-sum game. With my current understanding, this seems quite analogous to the way the KLD loss in a VAE forces abstractions that are coherent but varied.
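
The arms race above can be written as two loss functions computed from the discriminator's output probabilities. This sketch uses binary cross-entropy, as in the original GAN formulation, with plain Python floats standing in for a batch of discriminator outputs (`d_real` on training images, `d_fake` on generated ones); the function names are hypothetical. It shows why the game is zero-sum: in the minimax form, the generator's loss is exactly the negative of the part of the discriminator's loss that the generator can influence.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) -> 1 and D(fake) -> 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss_minimax(d_fake):
    """Zero-sum form: the generator minimises the negative of the discriminator's fake term."""
    return -sum(bce(p, 0) for p in d_fake) / len(d_fake)

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]), generator_loss_minimax([0.5, 0.5]))
```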

Early Results

I adjusted the hyperparameters to ensure that the discriminator would not completely demolish the generator (which would leave the generator without a sufficient gradient to improve). The early results, after only an hour of training, were incredibly shocking.
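
Why an overpowering discriminator starves the generator can be seen from the derivative of the generator's loss with respect to the discriminator's logit `a` on a fake image, where D = sigmoid(a). In the zero-sum (minimax) form, log(1 - D), the gradient shrinks toward zero exactly when the discriminator confidently rejects the fake. The widely used non-saturating alternative, -log(D), proposed in the original GAN paper, keeps the gradient near -1 in that regime. This is an illustrative sketch; which generator loss these runs used is not stated above.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def grad_saturating(logit):
    """d/da of log(1 - sigmoid(a)), the minimax generator loss, w.r.t. the discriminator logit."""
    return -sigmoid(logit)

def grad_non_saturating(logit):
    """d/da of -log(sigmoid(a)), the non-saturating generator loss."""
    return -(1.0 - sigmoid(logit))

# A discriminator that confidently rejects a fake outputs a very negative logit.
for logit in (0.0, -5.0, -10.0):
    print(logit, grad_saturating(logit), grad_non_saturating(logit))
```

At a logit of -10 the saturating gradient is on the order of 1e-5, so the generator barely moves, which is the situation the hyperparameter adjustments above were meant to avoid.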

Successfully Creating AGI (Artificial, Generative Incompetence)

Using my understanding of evolutionary biology, it is clearly necessary to introduce aspects of the three-body problem to create a more interesting and exhaustive system. That is, I must have at least a third entity that exerts evolutionary pressure on the other two, making the system more likely to produce powerful results. I decided to go with four networks in total, since the only disadvantage is slightly slower training: two generators and two discriminators. Each generator's loss function incorporated both discriminators, and each discriminator's loss function incorporated both generators. In this manner, each generator indirectly experiences pressure from the other generator through the skill that generator contributes to the discriminators, and the same holds for the discriminators. I also introduced a key strategy: the Icarus Burner. If any of the four networks achieves a loss below an extremely low threshold, it is killed and respawns as a copy of the other network of its type. After respawning, it continues training and randomly diverges from its twin, allowing for the exploration of better parameters.
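
The coupling and the Icarus Burner can be sketched as follows. This is a hypothetical reconstruction, not the code used in these experiments. Parameters are plain lists of floats standing in for network weights. Each network's loss is taken as the mean of its losses against both opponents. The random deviation after respawning is modelled as Gaussian noise with an assumed scale `noise_std`, and `threshold` is likewise a placeholder.

```python
import random

def coupled_loss(losses_vs_opponents):
    """A network's loss is the mean of its losses against both networks of the opposite type."""
    return sum(losses_vs_opponents) / len(losses_vs_opponents)

def icarus_burner(pair, losses, threshold, noise_std, rng):
    """Hypothetical respawn rule for two networks of the same type.

    pair:   two parameter vectors (lists of floats), e.g. both generators
    losses: the current coupled loss of each network in the pair
    A network whose loss falls below `threshold` is replaced by a copy of its
    twin's parameters plus Gaussian noise, so the two do not stay identical.
    If both fall below the threshold they effectively swap (with noise); a
    real implementation would need an explicit rule for that case.
    """
    new_pair = [list(p) for p in pair]
    for i in (0, 1):
        if losses[i] < threshold:
            twin = pair[1 - i]  # copy from the pre-respawn twin
            new_pair[i] = [w + rng.gauss(0.0, noise_std) for w in twin]
    return new_pair

rng = random.Random(0)
generators = [[0.1, 0.2], [0.3, 0.4]]  # stand-ins for real network weights
g_losses = [
    coupled_loss([0.9, 1.1]),    # generator 0 vs discriminators 0 and 1
    coupled_loss([1e-6, 2e-6]),  # generator 1 vs discriminators 0 and 1
]
generators = icarus_burner(generators, g_losses, threshold=1e-4, noise_std=0.01, rng=rng)
# Generator 1 "flew too close to the sun": it is now a noisy copy of generator 0.
```

The same function would be applied to the pair of discriminators after each training step.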

After many attempts and revisions, I have found this approach too difficult to execute given my current skill and knowledge. The idea likely remains feasible, but every attempt I have made ended in mode collapse and unstable training, despite countermeasures such as a gradient penalty and a diversity penalty. I will likely revisit this strategy later when I am more capable, but for now I will move on to other projects with faster feedback cycles so that I can improve more quickly.
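
The exact diversity penalty used in these attempts is not specified above. One common form is the mode-seeking regularizer: two different latent vectors should produce images that differ in proportion to how far apart the latents are, and the penalty grows as that ratio collapses toward zero, which is the signature of mode collapse. The sketch below uses mean absolute difference as the distance in both spaces and hypothetical function names; in practice `img1` and `img2` would be generator outputs for `z1` and `z2`, and the penalty would be added to the generator's loss.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def mode_seeking_penalty(z1, z2, img1, img2, eps=1e-5):
    """Large when two different latents map to near-identical images (mode collapse)."""
    ratio = mean_abs_diff(img1, img2) / (mean_abs_diff(z1, z2) + eps)
    return 1.0 / (ratio + eps)

z1, z2 = [0.0, 0.0], [1.0, 1.0]
collapsed = mode_seeking_penalty(z1, z2, [0.2, 0.2], [0.2, 0.2])  # same image for both latents
diverse = mode_seeking_penalty(z1, z2, [0.0, 0.0], [0.9, 0.9])    # images differ
print(collapsed > diverse)  # True
```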